I read Luke Stark and Jevan Hutson’s Physiognomic AI paper last night, and it sparked some thinking about additional readings I could add to the graduate course on statistical theory for engineering (Detection and Estimation Theory) that I am teaching next semester.

“The inferential statistical methods on which machine learning is based, while useful in many contexts, fail when applied to extrapolating subjective human characteristics from physical features and even patterns of behavior, just as phrenology and physiognomy did.”

In the (mathematical) communication theory context in which I teach these methods, they are indeed useful. But I should probably teach more about the (non-mathematical) limitations of those methods. Even if I tell myself that I am teaching theory, that theory has a domain of application that is constrained both mathematically and normatively. We get trained in the former but not in the latter. Teaching a methodology without discussing its limitations is a bit like teaching someone how to shoot a gun without any discussion of safety.[*]

The paper draws parallels between the development of physiognomy and some AI-based computer vision applications to show that the arguments about utility and social good made for these applications now are nearly identical to those made for physiognomy then. They quote Lorenzo Niles Fowler, a phrenologist: “All teachers would be more successful if, by the aid of Phrenology, they trained their pupils with reference to their mental capacities.” Compare this to the push for using ML to generate individual learning plans.

The problem is not (necessarily) that giving students individualized instruction is bad, but that ML’s “internally consistent, but largely self-referential epistemological framework” cherry-picks what it wants from the application domain to find a nail for the ML hammer. As they write: “[s]uch justifications also often point to extant scientific literature from other fields, often without delving [into] its details and effacing controversies and disagreements within the original discipline.”

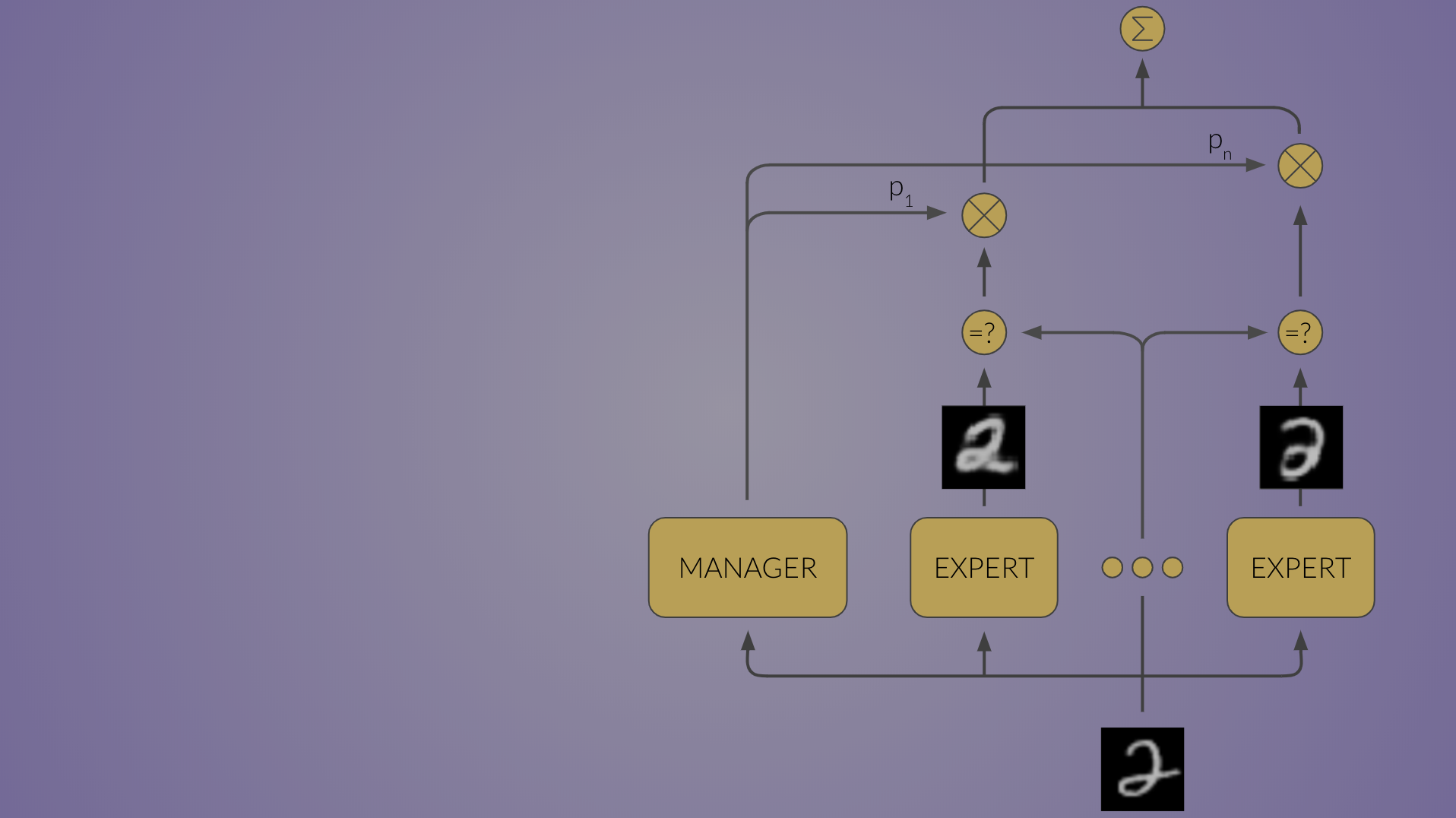

Getting back to pedagogy, I think it’s important to address this “everything looks like a nail” phenomenon. One place to start is to think carefully about even the cartoon examples we use in class. Perhaps I should also add a supplemental reading list to go along with each topic. We fancy ourselves theorists, but I think that’s a dodge. Students take the class because they are excited about doing machine learning. When they go off into industry, they should be able to think critically about whether the tool is right for the job: not just “is logistic loss the right loss function?” but “is this even the right question to be asking or trying to answer?”

[*] That is, very American?