OpenAI recently introduced GPT-3, the largest language model to date with 175 billion parameters. In this blog post, I will summarize the important points for those familiar with language models who want to understand the key aspects of this work without having to read the full 72-page paper.

The authors of GPT-3 highlight the limitations of fine-tuning using task-specific datasets, as acquiring these datasets can be challenging and fine-tuning can lead to poor out-of-distribution performance. The authors propose “in-context learning,” which involves providing the model with a task specification or a few task demonstrations as a prompt. This primes the model to focus on the specific task and adapt quickly to it. The assumption is that the model learns a diverse set of skills and pattern recognition abilities during training and utilizes them during inference to recognize or adapt to the desired task.
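To make the idea concrete, here is a minimal sketch of how such a prompt might be assembled. The `build_prompt` helper and the "Input:/Output:" layout are my own assumptions for illustration, not the exact format used in the paper.

```python
def build_prompt(task_description, demonstrations, query, k):
    """Assemble a zero-, one-, or few-shot prompt as plain text.

    k=0 yields a zero-shot prompt (task description only); k>=1 prepends
    the first k demonstrations. The "Input:/Output:" layout is an
    assumption made for this sketch.
    """
    lines = [task_description] if task_description else []
    for source, target in demonstrations[:k]:
        lines.append(f"Input: {source}\nOutput: {target}")
    # The last item is left open; the model's continuation is the answer.
    lines.append(f"Input: {query}\nOutput:")
    return "\n\n".join(lines)


demos = [("cheese", "fromage"), ("sea otter", "loutre de mer")]
prompt = build_prompt("Translate English to French.", demos, "peppermint", k=2)
print(prompt)
```

No weights are updated at any point: the only thing that changes between zero-shot and few-shot is the text the model is conditioned on.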

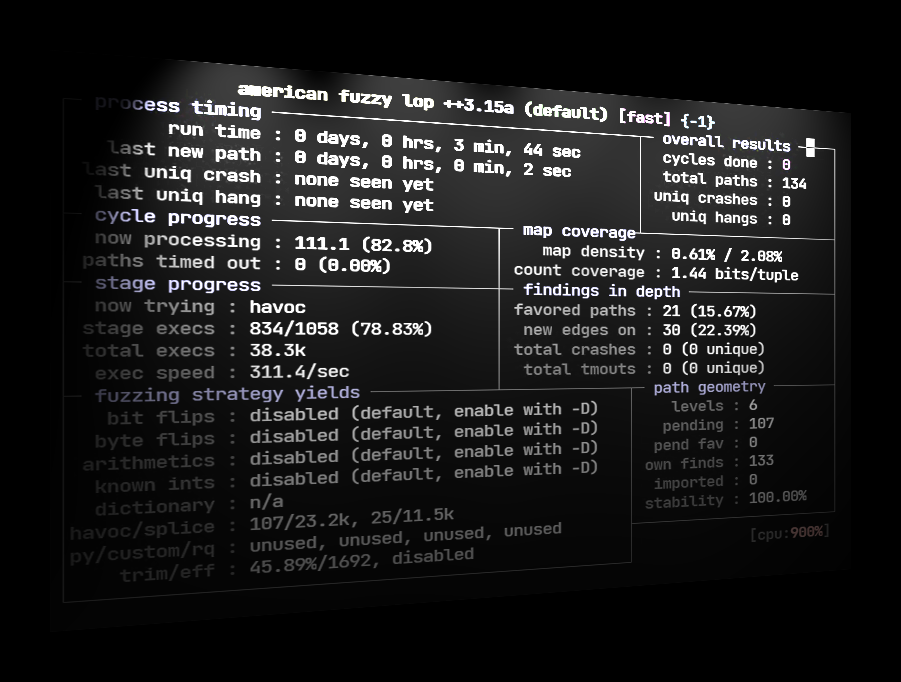

Bigger models are expected to have better in-context capabilities, since lower perplexity (a measure of how uncertain the model is about the next token) is generally associated with better performance on downstream tasks, and perplexity tends to fall as models grow. The authors tested this with a task in which the model had to remove random symbols from a word, varying the number of in-context examples provided. The results showed that including a natural-language task description in the prompt played a significant role, especially when the number of examples was low.
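For readers who want the definition behind the term, perplexity is the exponential of the average negative log-likelihood per token. The sketch below computes it from a list of per-token log-probabilities; the probability values are made up for illustration and do not come from any real model.

```python
import math


def perplexity(token_log_probs):
    """Perplexity = exp(average negative log-likelihood per token)."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)


# Hypothetical per-token probabilities assigned by two models to the same text.
confident = [math.log(p) for p in (0.5, 0.5, 0.5, 0.5)]
uncertain = [math.log(p) for p in (0.1, 0.1, 0.1, 0.1)]

print(perplexity(confident))  # lower: the model is less "surprised"
print(perplexity(uncertain))  # higher: the model is more "surprised"
```

A perplexity of k can be read as the model being, on average, as uncertain as if it were choosing uniformly among k tokens.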

GPT-3’s architecture is similar to GPT-2, with some modifications. It uses a Transformer-based architecture and alternates dense and locally banded sparse attention patterns across its layers, similar to the Sparse Transformer. The authors trained GPT-3 in eight sizes, ranging from 125 million to 175 billion parameters, to analyze the relationship between model size and benchmark performance.
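The difference between the two attention patterns is easiest to see as masks. The following is a simplified sketch in plain Python: the `window` parameter is hypothetical, and the actual Sparse Transformer patterns are more involved than a single band.

```python
def dense_causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def banded_causal_mask(n, window):
    """Causal mask restricted to the `window` most recent positions."""
    return [[i - window < j <= i for j in range(n)] for i in range(n)]


def show(mask):
    """Render a mask with 'x' for allowed and '.' for blocked positions."""
    return "\n".join("".join("x" if ok else "." for ok in row) for row in mask)


print(show(dense_causal_mask(6)))
print()
print(show(banded_causal_mask(6, window=3)))
```

In the dense mask the number of allowed pairs grows quadratically with sequence length, while in the banded mask it grows roughly linearly, which is the motivation for mixing the two.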

To improve the quality of the datasets, the authors filtered the CommonCrawl dataset based on similarity to high-quality reference corpora, performed deduplication to remove redundancy, and added known high-quality corpora to the training mix.
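As a rough illustration of the deduplication step, the sketch below drops near-duplicate documents using Jaccard similarity over word trigrams. This is a simplified stand-in, not the authors' pipeline: the shingle size, the `threshold` value, and the all-pairs comparison are assumptions chosen for readability.

```python
def shingles(text, n=3):
    """Set of word n-grams (shingles) for a document."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(max(len(words) - n + 1, 1))}


def jaccard(a, b):
    """Jaccard similarity of two sets: |a & b| / |a | b|."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def deduplicate(docs, threshold=0.8):
    """Keep a document only if it is not a near-duplicate of one already kept."""
    kept, kept_shingles = [], []
    for doc in docs:
        s = shingles(doc)
        if all(jaccard(s, t) < threshold for t in kept_shingles):
            kept.append(doc)
            kept_shingles.append(s)
    return kept


docs = [
    "the quick brown fox jumps over the lazy dog",
    "The quick brown fox jumps over the lazy dog today",  # near-duplicate
    "language models are few shot learners",
]
print(deduplicate(docs))
```

Comparing every pair of documents is quadratic and would not work at CommonCrawl scale; hashing schemes such as MinHash approximate the same similarity without all-pairs comparison.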

The authors evaluated GPT-3 on various NLP benchmarks and found promising results. For example, GPT-3 set a new state of the art on the LAMBADA language modeling task. In closed-book question answering it also outperformed the previous SOTA on TriviaQA, suggesting that larger models continue to absorb knowledge as their capacity increases. However, GPT-3 showed weaknesses in tasks that involve comparing two sentences, such as WiC and ANLI, and on some reading comprehension datasets, indicating areas for improvement. News article generation, by contrast, was a strength: human evaluators had difficulty telling GPT-3's articles apart from human-written ones.

As model size increases, the risk of memorization also increases. The authors acknowledged the challenge of detecting test contamination in internet-scale datasets and attempted to mitigate it by removing training documents that overlapped with benchmark test sets. A bug left some contamination in place, but their follow-up analysis suggested that it had little effect on most results.
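A common way to detect this kind of overlap is to look for shared word n-grams between a training document and the test examples. The sketch below shows the idea; whitespace tokenization and the default `n=13` are assumptions for illustration, and the function names are my own.

```python
def ngrams(text, n):
    """Set of word n-grams in a text (empty if the text has fewer than n words)."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def is_contaminated(document, test_examples, n=13):
    """True if the document shares at least one word n-gram with any test example.

    The default n=13 is an assumed value for this sketch.
    """
    doc_grams = ngrams(document, n)
    return any(doc_grams & ngrams(example, n) for example in test_examples)


test_set = ["what is the capital of france paris"]
clean = "the weather in paris is mild in spring"
leaked = "quiz answers: what is the capital of france paris of course"

# A small n is used here only because the toy texts are short.
print(is_contaminated(clean, test_set, n=4))
print(is_contaminated(leaked, test_set, n=4))
```

Smaller values of n flag more documents, including false positives from common phrases, while larger values miss paraphrased or partially quoted test items.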

While GPT-3 improves on previous models, it still has weaknesses such as repetition, loss of coherence over long passages, and self-contradiction. The choice of an autoregressive language model rather than a bidirectional model like BERT might contribute to some of these weaknesses. Moving forward, training a bidirectional model at the scale of GPT-3, or getting bidirectional models to work with few-shot learning, is a promising direction for research.

There are also fundamental limitations in the pretraining objective shared by autoregressive and bidirectional models. Improving the pretraining objective and grounding the model in other domains, such as video or real-world interaction, could lead to improvements. Additionally, improving pretraining sample efficiency and exploring goal-directed actions rather than just predictions are important areas for future work.

Lastly, the size of GPT-3 poses practical challenges, and distillation techniques could be explored to address this. Overall, GPT-3 represents a significant advancement in language models, but further research is needed to overcome its limitations and enhance its capabilities.